Syncing Errors using Azure Blob Storage

-

We implemented JSReport on our AKS cluster. Each deployment has multiple pods all connecting to the same Azure Storage Account. This was working fine for the longest time, but as load increased we are getting these messages:

Error when syncing fs journal, no worry, we will run again

Timeout during waiting for file system, try it again later.

stack=Error: Timeout during waiting for file system, try it again later.

and

Error when cleaning fs journal, no worry, we will run again There is already a lease present.This sometimes caused errors when importing templates from one deployment to another, where templates disappeared or old versions still remained.

Is there any known cause for this and what is the recommended resolution?

-

I apologize for the trouble.

The mentioned errors signal that one pod was trying to obtain a storage lock to do some work, but it was held by another pod and waiting for getting the lock timed out.Is your blob storage account in the same cloud region as the AKS?

Did you modify the lock configs?

"extensions": { "fs-store": { "persistence": { "provider": "azure-storage" } }, "fs-store-azure-storage-persistence": { "accountName": "...", "accountKey": "...", // the rest is optional "container": "jsreport", "lock": { "retry": 100, "leaseDuration": 30, "enabled": true } } }How much time does it take to import your templates?

What jsreport and jsreport-fs-store-azure-storage-persistence do you use (versions)?Is the typical load just about rendering templates?

How many pods do you have and how many reports do you render per minute?

(just asking so I can try to replicate the issue with some load test, I don't need exact values)

-

Hi Jan,

We narrowed down the specific instances of the syncing issues. Only when we import a large export file from one instance to another is when we get locking issues.

What can we do in the configuration to help solve this?

2023-05-10T16:19:18.001Z - debug: import is reading export file

2023-05-10T16:19:19.275Z - debug: import found 414 objects

2023-05-10T16:20:13.566Z - debug: import finished

2023-05-10T16:20:16.769Z - debug: OData query on settings

2023-05-10T16:20:16.811Z - debug: OData query on settings

2023-05-10T16:20:17.067Z - debug: OData query on templates

2023-05-10T16:20:17.073Z - debug: OData query on folders

2023-05-10T16:20:17.081Z - debug: OData query on data

2023-05-10T16:20:17.085Z - debug: OData query on components

2023-05-10T16:20:17.099Z - debug: OData query on tags

2023-05-10T16:20:17.327Z - debug: OData query on assets

2023-05-10T16:20:17.382Z - debug: OData query on scripts

2023-05-10T16:20:17.384Z - debug: OData query on templates

2023-05-10T16:20:17.389Z - debug: OData query on schedules

2023-05-10T16:20:17.576Z - debug: OData query on folders

2023-05-10T16:20:17.676Z - debug: OData query on components

2023-05-10T16:20:17.828Z - debug: OData query on data

2023-05-10T16:20:17.864Z - debug: OData query on tags

2023-05-10T16:20:17.894Z - debug: OData query on assets

2023-05-10T16:20:17.931Z - debug: OData query on scripts

2023-05-10T16:20:18.126Z - debug: OData query on schedules

2023-05-10T16:20:29.538Z - debug: OData query on components

2023-05-10T16:20:29.540Z - debug: OData query on templates

2023-05-10T16:20:29.546Z - debug: OData query on folders

2023-05-10T16:20:29.551Z - debug: OData query on data

2023-05-10T16:20:29.555Z - debug: OData query on assets

2023-05-10T16:20:29.572Z - debug: OData query on tags

2023-05-10T16:20:29.842Z - debug: OData query on scripts

2023-05-10T16:20:29.844Z - debug: OData query on schedules

2023-05-10T16:20:30.145Z - debug: OData query on settings

2023-05-10T16:20:30.852Z - debug: OData query on templates

2023-05-10T16:20:31.680Z - debug: OData query on profiles

2023-05-10T16:20:32.267Z - debug: OData query on profiles

2023-05-10T16:20:32.951Z - debug: OData query on profiles

2023-05-10T16:20:33.248Z - warn: Error when syncing fs journal, no worry, we will run again Timeout during waiting for file system, try it again later. stack=Error: Timeout during waiting for file system, try it again later.

at Object.rejectItemsWithTimeout (/app/node_modules/@jsreport/jsreport-fs-store/lib/queue.js:52:23)

at Timeout._onTimeout (/app/node_modules/@jsreport/jsreport-fs-store/lib/transaction.js:29:11)

at listOnTimeout (node:internal/timers:559:17)

at processTimers (node:internal/timers:502:7)

2023-05-10T16:20:33.707Z - debug: OData query on profiles

2023-05-10T16:20:34.493Z - debug: OData query on profiles

2023-05-10T16:20:35.247Z - warn: unable to load planned schedules, but no need to worry, we will retry, details:Error: Timeout during waiting for file system, try it again later.

at Object.rejectItemsWithTimeout (/app/node_modules/@jsreport/jsreport-fs-store/lib/queue.js:52:23)

at Timeout._onTimeout (/app/node_modules/@jsreport/jsreport-fs-store/lib/transaction.js:29:11)

at listOnTimeout (node:internal/timers:559:17)

at processTimers (node:internal/timers:502:7)

-

I apologize for the late response. I missed the notification.

When the import is finished, the instance which was doing the import writes to the

fs.journalinformation instructing other instances to reload data. This can be time-consuming with a lot of locking which can cause the timeout warns you noticed.Please try to increase the following configs

Lock the blob storage longer, because your import can take longer than default minute

extensions.fs-store-azure-storage-persistence.lock.leaseDuration=2mWait longer for file system

extensions.fs-store.persistenceQueueWaitingTimeout=3mRun background fs operation less frequently, the azure storage is not so cheap to reach as real file system

extensions.fs-store.compactionInterval=3m

-

Hi Jan,

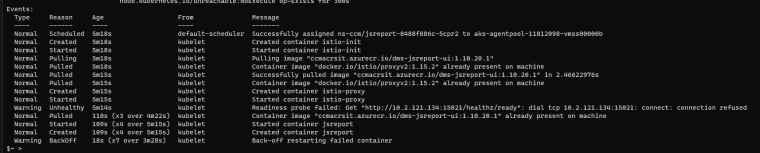

Thank you for the response. I tried to add the lock information to the config file, but now it fails trying to connect to a readiness probe. Is that a process internal to JSReport? If so, do we need to do additional configuration to our AKS cluster or the storage account?

"lock": {

"leaseDuration": "2m",

"enabled" : true,

"retry" : 100

}

-

That

healthz/readyis not jsreport url.

Get the pod logskubectl logsorkubectl logs --previous.

-

This is the complete previous log from the pod just after restart

2023-05-24T13:09:16.771Z - info: Initializing jsreport (version: 3.11.3, configuration file: jsreport.config.json, nodejs: 16.19.0)

2023-05-24T13:09:16.773Z - info: Searching for available extensions in /app/

2023-05-24T13:09:16.777Z - info: Extensions location cache not found, crawling directories

2023-05-24T13:09:17.195Z - info: Found 36 extension(s)

2023-05-24T13:09:17.234Z - debug: Writing extension locations cache to /tmp/jsreport/core/locations.json

2023-05-24T13:09:17.235Z - debug: Discovered 36 extensions

2023-05-24T13:09:17.319Z - info: Using extension authentication@3.4.0

2023-05-24T13:09:17.487Z - debug: Extension authentication@3.4.0 was disabled

2023-05-24T13:09:17.488Z - info: Using extension base@3.0.1

2023-05-24T13:09:17.493Z - info: Using extension child-templates@3.1.0

2023-05-24T13:09:17.495Z - info: Using extension cli@3.2.3

2023-05-24T13:09:17.497Z - info: Using extension components@3.3.0

2023-05-24T13:09:17.499Z - info: Using extension data@3.1.0

2023-05-24T13:09:17.500Z - info: Using extension express@3.7.1

2023-05-24T13:09:17.759Z - info: Using extension freeze@3.0.1

2023-05-24T13:09:17.761Z - info: Using extension fs-store@3.2.4

2023-05-24T13:09:17.861Z - info: Using extension handlebars@3.2.1

2023-05-24T13:09:17.863Z - info: Using extension import-export@3.2.0

2023-05-24T13:09:17.873Z - info: Using extension jsrender@3.0.0

2023-05-24T13:09:17.873Z - info: Using extension licensing@3.0.3

2023-05-24T13:09:17.892Z - info: Using extension localization@3.2.2

2023-05-24T13:09:17.893Z - info: Using extension npm@3.1.2

2023-05-24T13:09:17.897Z - info: Using extension pdf-utils@3.9.0

2023-05-24T13:09:17.905Z - info: Using extension reports@3.1.1

2023-05-24T13:09:17.909Z - info: Using extension tags@3.1.1

2023-05-24T13:09:17.912Z - info: Using extension text@3.0.0

2023-05-24T13:09:17.913Z - info: Using extension version-control@3.1.2

2023-05-24T13:09:17.932Z - info: Using extension assets@3.6.0

2023-05-24T13:09:17.943Z - info: Using extension authorization@3.3.0

2023-05-24T13:09:17.946Z - debug: Extension authorization@3.3.0 was disabled

2023-05-24T13:09:17.947Z - info: Using extension browser-client@3.1.1

2023-05-24T13:09:17.949Z - info: Using extension chrome-pdf@3.3.0

2023-05-24T13:09:17.952Z - debug: Chrome strategy is chrome-pool, numberOfWorkers: 1

2023-05-24T13:09:17.953Z - debug: Chrome custom launch options are args=--no-sandbox,--disable-dev-shm-usage, executablePath=/usr/lib/chromium/chrome

2023-05-24T13:09:17.953Z - info: Using extension docx@3.7.1

2023-05-24T13:09:17.956Z - info: Using extension fs-store-azure-storage-persistence@3.0.0

2023-05-24T13:09:18.202Z - info: Using extension html-to-xlsx@3.3.1

2023-05-24T13:09:18.292Z - info: html-to-xlsx detected chrome as available html engine

2023-05-24T13:09:18.292Z - info: Using extension pptx@3.4.0

2023-05-24T13:09:18.295Z - info: Using extension scheduling@3.0.3

2023-05-24T13:09:18.348Z - info: Using extension scripts@3.4.1

2023-05-24T13:09:18.349Z - info: Using extension static-pdf@3.0.1

2023-05-24T13:09:18.350Z - info: Using extension studio@3.9.2

2023-05-24T13:09:18.469Z - info: Using extension xlsx@3.4.0

2023-05-24T13:09:18.499Z - info: Using extension public-templates@3.0.0

2023-05-24T13:09:18.500Z - debug: Extension public-templates@3.0.0 was disabled

2023-05-24T13:09:18.501Z - info: Using extension sample-template@3.2.1

2023-05-24T13:09:18.503Z - info: Using extension studio-theme-dark@3.0.1

2023-05-24T13:09:18.505Z - info: Code sandboxing is disabled, users can potentially penetrate the local system if you allow code from external users to be part of your reports

2023-05-24T13:09:18.505Z - info: Using general timeout for rendering (reportTimeout: 60000)

2023-05-24T13:09:18.505Z - info: Using fs provider for template store.

2023-05-24T13:09:18.522Z - info: fs store is persisting using azure-storage for /app/data

2023-05-24T13:10:08.783Z - error: Error occurred during reporter init: StorageError: The value for one of the HTTP headers is not in the correct format.

RequestId:a796de99-f01e-004f-6f41-8e81c8000000

Time:2023-05-24T13:10:07.7904149Z

at Function.StorageServiceClient._normalizeError (/app/node_modules/azure-storage/lib/common/services/storageserviceclient.js:1205:23)

at BlobService.StorageServiceClient._processResponse (/app/node_modules/azure-storage/lib/common/services/storageserviceclient.js:751:50)

at Request.processResponseCallback [as _callback] (/app/node_modules/azure-storage/lib/common/services/storageserviceclient.js:319:37)

at Request.self.callback (/app/node_modules/request/request.js:185:22)

at Request.emit (node:events:513:28)

at Request.<anonymous> (/app/node_modules/request/request.js:1154:10)

at Request.emit (node:events:513:28)

at IncomingMessage.<anonymous> (/app/node_modules/request/request.js:1076:12)

at Object.onceWrapper (node:events:627:28)

at IncomingMessage.emit (node:events:525:35)

StorageError: The value for one of the HTTP headers is not in the correct format.

RequestId:a796de99-f01e-004f-6f41-8e81c8000000

Time:2023-05-24T13:10:07.7904149Z

at Function.StorageServiceClient._normalizeError (/app/node_modules/azure-storage/lib/common/services/storageserviceclient.js:1205:23)

at BlobService.StorageServiceClient._processResponse (/app/node_modules/azure-storage/lib/common/services/storageserviceclient.js:751:50)

at Request.processResponseCallback [as _callback] (/app/node_modules/azure-storage/lib/common/services/storageserviceclient.js:319:37)

at Request.self.callback (/app/node_modules/request/request.js:185:22)

at Request.emit (node:events:513:28)

at Request.<anonymous> (/app/node_modules/request/request.js:1154:10)

at Request.emit (node:events:513:28)

at IncomingMessage.<anonymous> (/app/node_modules/request/request.js:1076:12)

at Object.onceWrapper (node:events:627:28)

at IncomingMessage.emit (node:events:525:35)Here is the jsreport.config.json:

{

"store": {

"provider": "fs"

},

"extensions": {

"fs-store": {

"persistence": {

"provider": "azure-storage"

}

},

"fs-store-azure-storage-persistence": {

"accountName": "x",

"accountKey": "xx",

"lock": {

"leaseDuration": "2m",

"enabled" : true,

"retry" : 100

}

},

"studio": {

"enabled": true

},

"express": {

"enabled": true

}

}

}Anything in the configuration I can do to help this?

Thanks,

-

Try using number in

leaseDuration:120

-

Thank you for the suggestion, however that was not the culprit. I am getting the same error message

-

I apologize. I've read the docs now and the maximum

leaseDurationin azure is 60s which is the default. So we can't change this. Please skip the lock options.If the other configs won't help. Please check if you have the storage in the same Azure region as the app.

Also azure provides low-latency premium storage, maybe it will work faster and eliminate the problem.

Another option is to map some file system azure provides and use plain jsreport fs store.