Import timeout

-

Hi,

I'm using JSReport enterprise in a Docker environment.

I'm using the default values for storage (fs). I made an export in the UI and got a zip file of 10.8 MB.

At this point, everything is OK.

Now, when I try to import it again, I get a timeout after 2 minutes. The connection simply drops.

Request has been terminated
Possible causes: the network is offline, Origin is not allowed by Access-Control-Allow-Origin, the page is being unloaded, etc.
crossDomainError@http://xxx.xxx.xxx.xxx:5488/studio/assets/client.js?e33dc3808fd7cf980ebb:32:7719
onreadystatechange@http://xxx.xxx.xxx.xxx:5488/studio/assets/client.js?e33dc3808fd7cf980ebb:32:8506

2 minutes looks like a long time given the amount of data.

I'm using JSReport 2.4.0-full, also tried 2.5.0-full with no luck.

Here is my config file content:

{"httpPort":5488,"allowLocalFilesAccess":true,"store":{"provider":"fs"},"blobStorage":{"provider":"fs"},"templatingEngines":{"timeout":10000},"chrome":{"timeout":30000},"extensions":{"scripts":{"timeout":30000}}}

Can you give me some advice?

-

Hi, does this also happen in your local PC environment, or is it specific to your production environment?

Are you able to share the export zip somewhere or email it to me?

-

This doesn't happen on my local PC. It's specific to my staging and production environments.

They are using Docker (with Rancher 1.6) with an NFS server.

Connectivity between servers and NFS is OK since all other services are working well.

Also, when I use MongoDB (which is also persisted in NFS) it doesn't happen.

-

Please try this configuration

"extensions": { "fs-store": { "syncModifications": false } }
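For reference, here is a sketch of how this fragment might be merged into the config file posted above. It assumes the new `fs-store` key simply sits next to the existing `scripts` entry under `extensions`; every other value is copied unchanged from the original post.

```json
{
  "httpPort": 5488,
  "allowLocalFilesAccess": true,
  "store": { "provider": "fs" },
  "blobStorage": { "provider": "fs" },
  "templatingEngines": { "timeout": 10000 },
  "chrome": { "timeout": 30000 },
  "extensions": {
    "scripts": { "timeout": 30000 },
    "fs-store": { "syncModifications": false }
  }
}
```

The container most likely needs a restart for the changed config to be picked up.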

-

Hi Jan,

Thanks, I just tried it and the same thing is happening.

I forgot to mention that some data is added, but I don't know whether all of it is there :/

-

Hm. We will need to try to isolate the source of the problem. We don't know whether it is just slow because of the network fs, whether it is a general problem with import, or whether it is some other kind of problem.

What if you import a smaller export, does it work?

How long does it take to edit a bigger entity in the studio?

What if you configure the template store to connect to mongodb, does it import the whole package?
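A rough sketch of what switching the template store to MongoDB could look like in the config file. The `mongodb-store` option names (`address`, `port`, `databaseName`) are given from memory of the jsreport-mongodb-store extension and should be checked against its documentation for your version. The address and database name are placeholders, not values from this thread.

```json
{
  "store": { "provider": "mongodb" },
  "extensions": {
    "mongodb-store": {
      "address": "127.0.0.1",
      "port": 27017,
      "databaseName": "jsreport"
    }
  }
}
```

This assumes the mongodb store extension is available in the image in use; if it is not, it would need to be installed first.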

-

Actually, when I don't persist data on the NFS server (no volume on the Docker container), it takes a minute to import the data.

I benchmarked communication between the container and the NFS server:

root@ac2bf523cd00:/app# time dd if=/dev/zero of=data/testfile bs=16k count=128k
131072+0 records in
131072+0 records out
2147483648 bytes (2.1 GB, 2.0 GiB) copied, 18.434 s, 116 MB/s

real 0m19.292s
user 0m0.029s
sys 0m2.351s

I don't have exact data on editing big entities, but so far it's pretty fast.

I also just tried importing a 5.4 MB file and got the same issue.

I'll give MongoDB a shot.

-

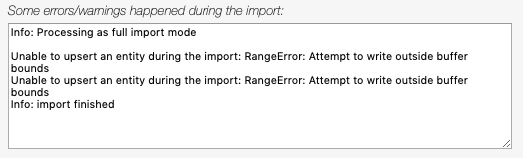

Well, here is the import with MongoDB 3.6

-

I'm not sure what the errors mentioned are. Would you be able to share the import package?

-

Ok so I just tried again on my computer.

The same issue. I really think it's just an import performance issue.

I'm not allowed to send the import package, as it contains a lot of sensitive data.

However, I tried deleting all the sensitive data and importing the file, and it works! But the import package is only 100 KB now, so...

I haven't found any workaround yet. It's still the same issue, but now I'm sure it's not related to NFS.

-

It seems to be related to your particular export package if you say it doesn't work on your local pc as well.

However, I've tried a 10 MB export package and it imports in 1s.

I believe it is not related to the actual content of your templates and data. You should be able to replace characters in your templates/data with some 'a' values and share the export with me.

-

Hi :)

It's been a while but we're still facing the problem.

I just sent you a zip file by email to show you that it breaks even with ~500 KB of data.

-

The performance problem was solved here:

https://github.com/jsreport/jsreport-authorization/commit/57a93e58f13d3f84f59eada09223c1f488bb469b

It will be part of the next release.

The current workaround is to temporarily disable authentication before import in case it is timing out.
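As a sketch, the workaround might look like this in the config file. It assumes authentication is configured under `extensions.authentication` and that its `enabled` flag is what turns it off. Any existing `admin` and `cookieSession` settings in that block can stay as they are.

```json
{
  "extensions": {
    "authentication": {
      "enabled": false
    }
  }
}
```

Set `enabled` back to `true` once the import has finished, since the server is unprotected while authentication is off.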

-

Thanks a lot for your help ! :)

I tried disabling authentication and it worked great. It's still a bit slow, but it's not crashing, which is great! :)

Waiting for the new release :)

-

Hi !

Just tried your fix with the new 2.6.1!

But I'm still facing the issue :(

If I remove authentication it works, but if I leave it enabled it crashes during import.

-

Hm. Also on your local?

It finishes for me within 3s and with disabled auth in 2s.

-

Same on my local :/

-

If I remember correctly, you use Windows, right?

If so, have you tried on Linux? Maybe it's OS-related.

I'll try on Windows.

-

We may try to add some more optimizations later.

However, we see no issues on various OSX, Ubuntu, and Windows machines.

-

I'm facing the same issue on Windows :/

I'll dig in to see if I can find where this comes from.